
Do you need a DAC?

If you’ve started down the rabbit hole of audiophile gear, you may have encountered some folks online imploring you to get a fancy digital-to-analog converter (DAC) to make your whole system sound better. But before you go racing off to figure out how much money you’re going to be spending, read this article first to see if you actually need one. Chances are, you don’t.

Editor’s note: this article was updated on July 31, 2024, to improve interlinking and adjust language to reflect current conditions.

What is a DAC?

A DAC simply converts a digital audio signal into an analog one so that you can play the sound over headphones or speakers. It’s that simple! DAC chips are found in your audio source component, whether it’s a laptop, a portable music player, or a smartphone, though the analog headphone jack seems to be a dying feature (thanks to Apple).


Much like headphone amplifiers, standalone DACs came about as a response to poor audio quality at the consumer level. High-end headphones and speakers could reveal source components, their DACs, and output stages as the weakest links in the audio chain. This became particularly apparent when consumers started using their PCs as an audio source in the 1990s. Sometimes the DAC would have poor filtering, would be improperly shielded — introducing noise — or the power supply might be poorly regulated, impacting the quality of the rendered output.

But digital audio has come a long way since then. Better tech has made shortcomings of even the cheapest chips basically inaudible, and consumer audio has exploded in quality past the point of diminishing returns. Where it used to be true that your digital Walkman or laptop’s internal DAC chip wouldn’t be suitable for high-bitrate listening, there are plenty of portable devices nowadays that can keep up just fine.

When do I need a DAC?

The main reason you’d get a new DAC today is that your current system — be it your computer, smartphone, or home system — has noticeable noise, objectionable distortion or artifacts, or is incapable of operating at the bitrate of your audio files. If you already have an external DAC and are running into any of those issues, you should try troubleshooting before buying something new.

Otherwise, if you’ve convinced yourself that your existing DAC is the limiting factor in your playback system and that upgrading it will yield a worthwhile improvement, that might also be considered a reason to splurge. This would also fall under the category of “looking for something to spend money on.”

Because DACs are a largely spec-driven item, you can almost always pick out the one you need simply by looking at the details online. FiiO makes plenty of good products for cheap, and if you want an amplifier to go along with the DAC so you never have to worry about that either, the E10K is a solid pick for under $100 USD. You could also decide to throw money at the problem by picking up an ODAC or O2 amp + ODAC combo. But seriously, don’t sink too much money into this; spend your money on music instead.

How does a DAC work?

The job of the DAC is to take the digital samples that make up a stored recording and turn them back into a nice continuous analog signal. To do that, it needs to translate the bits of data from digital files into an analog electrical signal at thousands of set times per second, otherwise known as samples. The unit then outputs a wave that intersects all those points. Now, because DACs aren’t perfect, this conversion sometimes introduces errors. These imperfections manifest as jitter, high-frequency mirroring, narrow dynamic range, and limited bitrate.

Before launching into the nuts and bolts of how everything works, you need to know three terms: bitrate, bit depth, and sample rate. Bitrate simply refers to how much data is expressed per second. Sample rate refers to how many samples of data there are per second, and bit depth refers to how much data is recorded per sample.
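For uncompressed audio, the three terms are tied together by simple multiplication. Here is a minimal Python sketch of that relationship; the function name and the two-channel default are my own choices for illustration, and it only applies to uncompressed PCM, not to MP3 or other compressed formats.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> float:
    """Uncompressed PCM bitrate in kilobits per second.

    bitrate = samples per second * bits per sample * number of channels
    """
    return sample_rate_hz * bit_depth * channels / 1000


# CD-quality stereo: 44,100 samples per second at 16 bits per sample
cd_quality = pcm_bitrate_kbps(44_100, 16)

# A common "hi-res" stereo format: 96,000 samples per second at 24 bits
hi_res = pcm_bitrate_kbps(96_000, 24)

print(f"CD quality: {cd_quality} kbps")
print(f"96kHz/24-bit: {hi_res} kbps")
```

CD-quality stereo works out to 1,411.2kbps, which is where the "1400+kbps" figure for lossless files comes from.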

What is jitter?

I’ll preface this section just like I addressed it in the audio cable myths article: Jitter is mostly a theoretical problem at this point, and extremely unlikely to rear its head in any equipment made in the last decade. However, it’s still useful to know what it is and when it might be an issue, so let’s dive in.

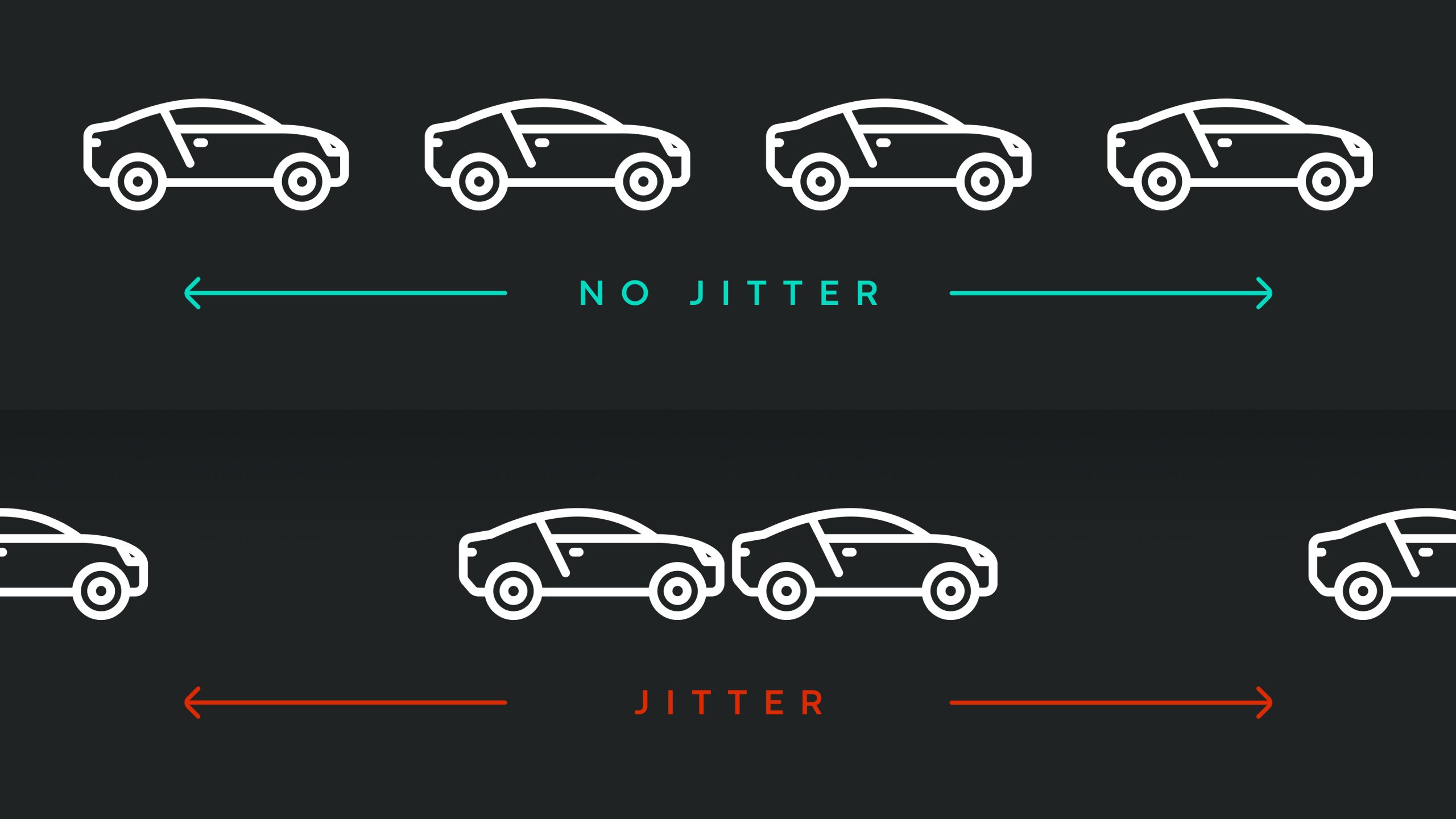

Jitter is a phenomenon that occurs when the clock, which tells the DAC when to convert each sample, isn’t as precise as it should be. This means the spaces between each converted sample aren’t the same, as in the illustration above. When the sample points aren’t being converted when they should, this can lead to inaccuracies in the playback waveform causing slight pitch errors. The higher the note that’s being reproduced, the more significant the pitch error.


However, it should be pointed out that this is another one of those problems that isn’t as common anymore because DAC units of today are so much better than those of the past. Even objectively, jitter tends to only have any impact at super-high frequencies because those notes have the shortest periods, so a fixed timing error takes up a larger fraction of each cycle. However, what makes high-frequency sounds more susceptible to this type of error also makes them less likely to be heard: no human can hear anywhere near the highest frequencies a modern DAC can decode, and it’s not close.
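The frequency dependence described above can be put into rough numbers. The sketch below is an approximation, not a measurement: it takes the steepest slope of a sine wave and multiplies it by the timing error to estimate the worst-case level error. The 1ns jitter figure is an assumption picked purely for illustration, and the function name is hypothetical.

```python
import math


def jitter_error_db(tone_hz: float, jitter_s: float) -> float:
    """Worst-case error, in dB relative to the tone's own amplitude,
    when a sample is converted jitter_s seconds too early or too late.

    Uses the steepest slope of a sine wave (2*pi*f*A) multiplied by the
    timing error, which is a small-error approximation.
    """
    return 20 * math.log10(2 * math.pi * tone_hz * jitter_s)


ASSUMED_JITTER_S = 1e-9  # 1 ns: an illustrative value, not a measured one

for tone_hz in (100, 1_000, 20_000):
    error_db = jitter_error_db(tone_hz, ASSUMED_JITTER_S)
    print(f"{tone_hz:>6} Hz: {error_db:.1f} dB")
```

Under these assumptions, the error at 20kHz is about 26dB higher than at 1kHz, yet it still sits roughly 78dB below the tone itself.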

What is aliasing?

Aliasing occurs when a signal is sampled with fewer than two samples per cycle, so the sampled data points can be misinterpreted as a lower frequency. It only happens when you sample a signal (either during analog-to-digital conversion in an ADC or during digital downsampling) and refers to errors in the signal spectrum caused by sampling below the Nyquist rate.
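To make the "fewer than two samples per cycle" rule concrete, here is a small Python sketch that works out where a pure tone lands after sampling. The function name is made up for this example, and it assumes an ideal sampler with no anti-aliasing filter in front of it.

```python
def alias_frequency(tone_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a sampled pure tone appears.

    Anything above half the sample rate folds back into the range
    0..sample_rate/2; tones below that limit are left where they are.
    """
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


# A 10kHz tone is below half of 44.1kHz, so it is captured correctly.
print(alias_frequency(10_000, 44_100))

# A 30kHz tone gets fewer than two samples per cycle at 44.1kHz,
# so it shows up as a 14.1kHz tone instead.
print(alias_frequency(30_000, 44_100))
```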

Aliasing doesn’t happen at the output of a DAC. If there isn’t a proper lowpass reconstruction (aka interpolation) filter at the output of the DAC, then there will be images of the original signal spectrum repeating around multiples of the DAC’s output sample rate. These image spectra, the high-frequency mirroring mentioned earlier, can create intermodulation distortion in the audible signal if they aren’t properly filtered out.
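As a rough illustration of where those images sit, the Python sketch below lists the mirror frequencies for a single tone. It assumes an idealized DAC with no reconstruction filter at all, and the function name and the `orders` parameter are hypothetical.

```python
def image_frequencies(tone_hz: int, sample_rate_hz: int, orders: int = 2) -> list[int]:
    """Image frequencies of a tone at the output of an unfiltered DAC.

    Images appear on either side of each multiple of the sample rate:
    k * sample_rate - tone and k * sample_rate + tone.
    """
    images = []
    for k in range(1, orders + 1):
        images.append(k * sample_rate_hz - tone_hz)
        images.append(k * sample_rate_hz + tone_hz)
    return images


# Images of a 10kHz tone played back at 44.1kHz
print(image_frequencies(10_000, 44_100))
```

Every image in this example lies above 20kHz, which is why a lowpass filter can remove them without touching the audible band.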

What are bit depth and dynamic range?

What governs the theoretical limits of the dynamic range of an audio file is the bit depth. Basically, every single sample (discussed above) contains information, and the more information each sample holds, the more potential output values it has. In layman’s terms, the greater the bit depth, the wider the range of possible note volumes. A low bit depth (either at the recording stage or in the file itself) will necessarily result in low dynamic range, making many sounds incorrectly emphasized (or muted altogether). Because there are only so many possible loudness values inside a digital file, it should follow that the lower the bit depth, the worse the file will sound. So the greater the bit depth, the better, right?

Well, this is where we run into the limits of human perception once again. The most common bit depth is 16, meaning: for every sample, there’s a possible 16 bits of information, or 65,536 integer values. In terms of audio, that’s a dynamic range of 96.33dB. In theory, that means that no sound less than 96dB down from peak level should get lost in the noise.
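The dynamic range figure comes straight from the number of integer values available per sample. A minimal Python sketch of that arithmetic follows; the function name is my own, and it gives the theoretical ratio between the largest and smallest step, ignoring dither and real-world converter noise.

```python
import math


def dynamic_range_db(bit_depth: int) -> float:
    """Theoretical dynamic range for a given bit depth.

    Each sample can take 2**bit_depth integer values; expressed as an
    amplitude ratio in decibels, that is 20 * log10(2**bit_depth).
    """
    return 20 * math.log10(2 ** bit_depth)


for bits in (16, 24):
    print(f"{bits}-bit: {2 ** bits:,} values, {dynamic_range_db(bits):.2f} dB")
```

Each extra bit adds about 6dB, so 16 bits gives 96.33dB and 24 bits gives 144.49dB.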

While that may not sound terribly impressive, you really need to think hard about how you listen to music. If you’re like me, you listen through headphones 99+% of the time, and you’ll be listening to your music at a volume much lower than that. For example, I try to limit my sessions to about 75dBSPL so I don’t cook my ears prematurely. At that level, added dynamic range isn’t going to be perceptible, and anyone telling you otherwise is simply wrong. Additionally, your hearing isn’t equally sensitive across all frequencies either, so your ears are the bottleneck here.


So why do so many people swear by 24-bit audio when 16-bit is just fine? Because that’s the bit depth where there theoretically shouldn’t be any problems ever for human ears. If you like to listen to recordings that are super quiet (think orchestral music) and you need to really crank the volume for everything to be heard, you need a lot more dynamic range than you would with an over-produced, too-loud pop song. While you’d never crank your amp to produce 144dB(SPL) peaks, 24-bit encoding would allow you to approach that without the noise floor on the recording becoming an issue.

Additionally, if you record music, it’s always better to record at a high sample rate, and then downsample, instead of the other way around. That way, you avoid having a high-bitrate file with low-bitrate dynamic range, or worse: added noise. While I’m a super big crank when it comes to silly-ass excesses in audio tech, this is one point I’m forced to concede. However, the necessity of 24-bit files for casual listeners is dramatically overstated.

What’s a good bitrate?

While bit depth is important, what most people are familiar with in terms of bad-sounding audio is either limited bitrate, or aggressive audio data compression. Ever listen to music on YouTube, then immediately notice the difference when switching to an iTunes track or high-quality streaming service? You’re hearing a difference in data compression quality.

If you’ve made it this far, you’re probably aware that the greater the bit depth is, the more information the DAC has to convert and output at once. This is why bitrate — the speed at which your music data is decoded — is somewhat important.


So how much is enough? I usually tell people the 320kbps rate is perfectly fine for most applications (assuming you’re listening to 16-bit files). Hell, it’s what Amazon uses for its store, and truth be told most people can’t tell the difference. Some of you out there like FLAC files, and that’s fine for archival purposes, but for mobile listening? Just use a 320kbps MP3 or Opus file; audio compression has improved by leaps and bounds in the last 20 years, and newer compression standards are able to do a lot more with a lot less than they used to. A low bitrate isn’t an immediate giveaway that your audio will be bad, but it’s not an encouraging sign.

If you’ve got space to spare, maybe you don’t care as much how big your files are, but smartphones generally don’t all come with 128GB standard… yet. But if you can’t tell the difference between a 320kbps MP3 and a 1400+kbps FLAC, why would you fill 45MB of space when you could get away with 15MB?
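The storage tradeoff is easy to estimate yourself, since file size is just bitrate multiplied by duration. The Python sketch below is a back-of-the-envelope calculation with a hypothetical function name. The track length is an assumption chosen so the MP3 lands on 15MB, and it treats both bitrates as constant, so a real FLAC file (which is losslessly compressed) will usually come out smaller than the uncompressed figure.

```python
def file_size_mb(bitrate_kbps: float, duration_s: float) -> float:
    """Approximate file size in megabytes for a constant-bitrate stream.

    kilobits/s -> bits/s -> total bits -> bytes -> megabytes
    """
    return bitrate_kbps * 1000 * duration_s / 8 / 1_000_000


ASSUMED_TRACK_LENGTH_S = 375  # 6 minutes 15 seconds, an illustrative length

mp3_size = file_size_mb(320, ASSUMED_TRACK_LENGTH_S)
pcm_size = file_size_mb(1411.2, ASSUMED_TRACK_LENGTH_S)

print(f"320kbps MP3: {mp3_size:.1f} MB")
print(f"Uncompressed CD-quality audio: {pcm_size:.1f} MB")
```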

FAQ

The LS50W has a great DAC built in, we suggest you use that instead! You just need an optical connection from your PC.

If the USB DAC includes a headphone amplifier with a decent power output, then yes, it will help drive your headphones properly to get the most out of them. But it’s the amplifier that’s the important part in your situation. A standalone amplifier would also get the job done.

Yes, using the DAC in your receiver will give you great audio from your CD transport. Although theoretically the coax and optical connections should be the same, the optical cable is generally considered to be a cleaner connection as it electrically isolates the two components.

On some older phones: sure. But the errors and noise that you’re likely to come across in this situation will be minor at best — not really something that you’ll hear in the din of a commute, or wherever else you go with your smartphone as your main source of music.

No. Do not buy a DAC for Bluetooth headphones, as they will already have a DAC chip inside to handle converting the digital signal to an analog one to send to the headphone’s drivers. A second DAC would be redundant.

Nope!