
SoundGuys content and methods audit 2020

If you’re a regular reader of SoundGuys, you’ve probably noticed that we like to keep things as direct and unimpeachable as possible. However, we’re a collection of human beings, and we often screw up. Every so often I go through the way we do things to see if there are any improvements we can make and, if so, implement them. This time, I thought it would be helpful for superfans and casual Googlers alike to see how we handle our work when things go awry.

Whether you’re a veteran review-junkie or someone just looking for the best product to buy, it can be very comforting to see a review with high ratings on Amazon or in print. Surely, you might say, that high score means this is the best product for me to buy! Unfortunately, that’s not always the case (and don’t call me Shirley).

The thing is: data can only tell you so much, and there’s often a piece of the puzzle missing when objective data is used to determine the “best” product in any category. You can’t just rely on a number to tell you everything you need to know about any product out there, simply because the only thing that matters is how you’ll like something. That’s why we spend so much time on our reviews to contextualize everything we’ve found with a set of headphones, earphones, or speakers.

Editor’s note: we have published our audit for 2021, which can be found here.

Scoring audit: our scores diverge from user scores

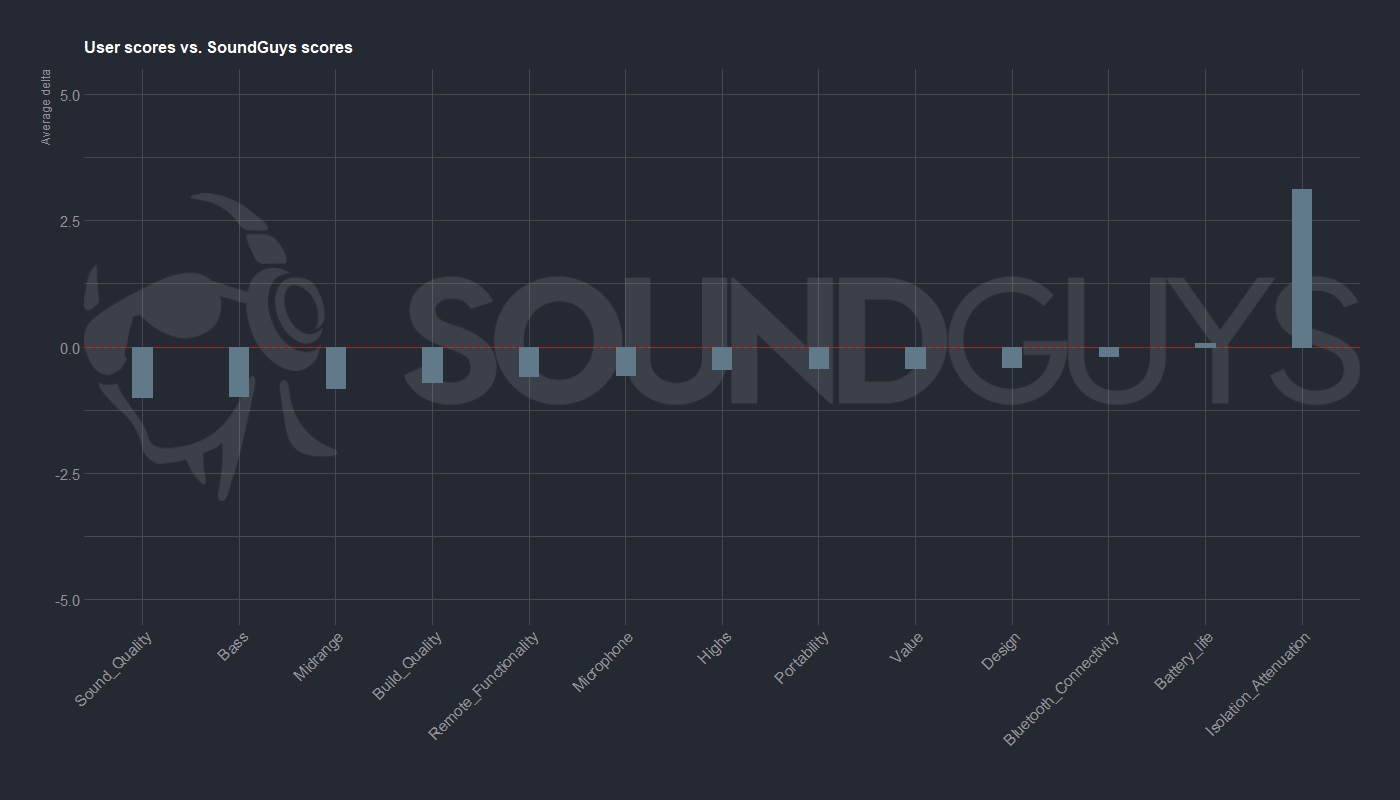

After scraping all scores for all reviews on our site, we wanted to see how well we approximated value judgments for different aspects of products’ performance. I personally was looking for our scores to land within 1.5 points of user scores in either direction, and we hit that, with one notable exception.
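As a rough illustration of that kind of check, here is a minimal sketch in Python. The category names, the sample numbers, and the helpers `score_gaps` and `flag_outliers` are all hypothetical; this is not our actual data or tooling. It simply averages house and user scores per category and flags any gap larger than 1.5 points.

```python
from statistics import mean

# Hypothetical sample: (category, house score, user score) per review.
# These numbers are invented for illustration, not scraped data.
REVIEWS = [
    ("sound quality", 8.5, 7.5),
    ("sound quality", 8.0, 7.5),
    ("isolation", 4.0, 7.0),
    ("isolation", 5.0, 7.0),
    ("battery life", 7.0, 7.5),
]

THRESHOLD = 1.5  # largest acceptable gap, in points


def score_gaps(reviews):
    """Return {category: mean user score - mean house score}."""
    house, user = {}, {}
    for category, house_score, user_score in reviews:
        house.setdefault(category, []).append(house_score)
        user.setdefault(category, []).append(user_score)
    return {c: mean(user[c]) - mean(house[c]) for c in house}


def flag_outliers(gaps, threshold=THRESHOLD):
    """List the categories whose absolute gap exceeds the threshold."""
    return sorted(c for c, gap in gaps.items() if abs(gap) > threshold)


gaps = score_gaps(REVIEWS)
outliers = flag_outliers(gaps)
```

A positive gap means users scored the category higher than the house score did, and a negative gap means they scored it lower. With this made-up sample, only the isolation category falls outside the threshold.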

Users rate isolation/attenuation much higher than we do

I’m not surprised by this result, as it’s one of those things that we fundamentally disagree with our readers on, and probably always will. The way we rate noise attenuation is built to reward both headphones and active noise cancelers on the same scale, so that you can directly compare the effectiveness of all headphones to one another.

Because many ANC headsets offer better noise attenuation by a couple orders of magnitude, making a fair scoring scheme is difficult, and ours doesn’t fit the mental models of many people who are only comparing headphones of a certain type. While many people will rate headphones that block out a bunch of noise highly, those ratings might not take into account how well ANC headphones perform at the same task. Even so, we can’t make a separate isolation/attenuation score for each product category, because then we couldn’t directly compare products across categories.

Users rate sound quality lower than we do

![A photo of the Beyerdynamic DT 990 PRO being worn by writer Adam Molina.](https://www.soundguys.com/wp-content/uploads/2017/11/Beyerdynamic-DT-990-ProG.jpg)

The other anomaly in our scoring is that our users are pretty much universally more negative on sound quality than we are. While that may be because the very nature of human ears means people disagree on a lot, I wasn’t expecting the near-universal dip in scores compared to ours.

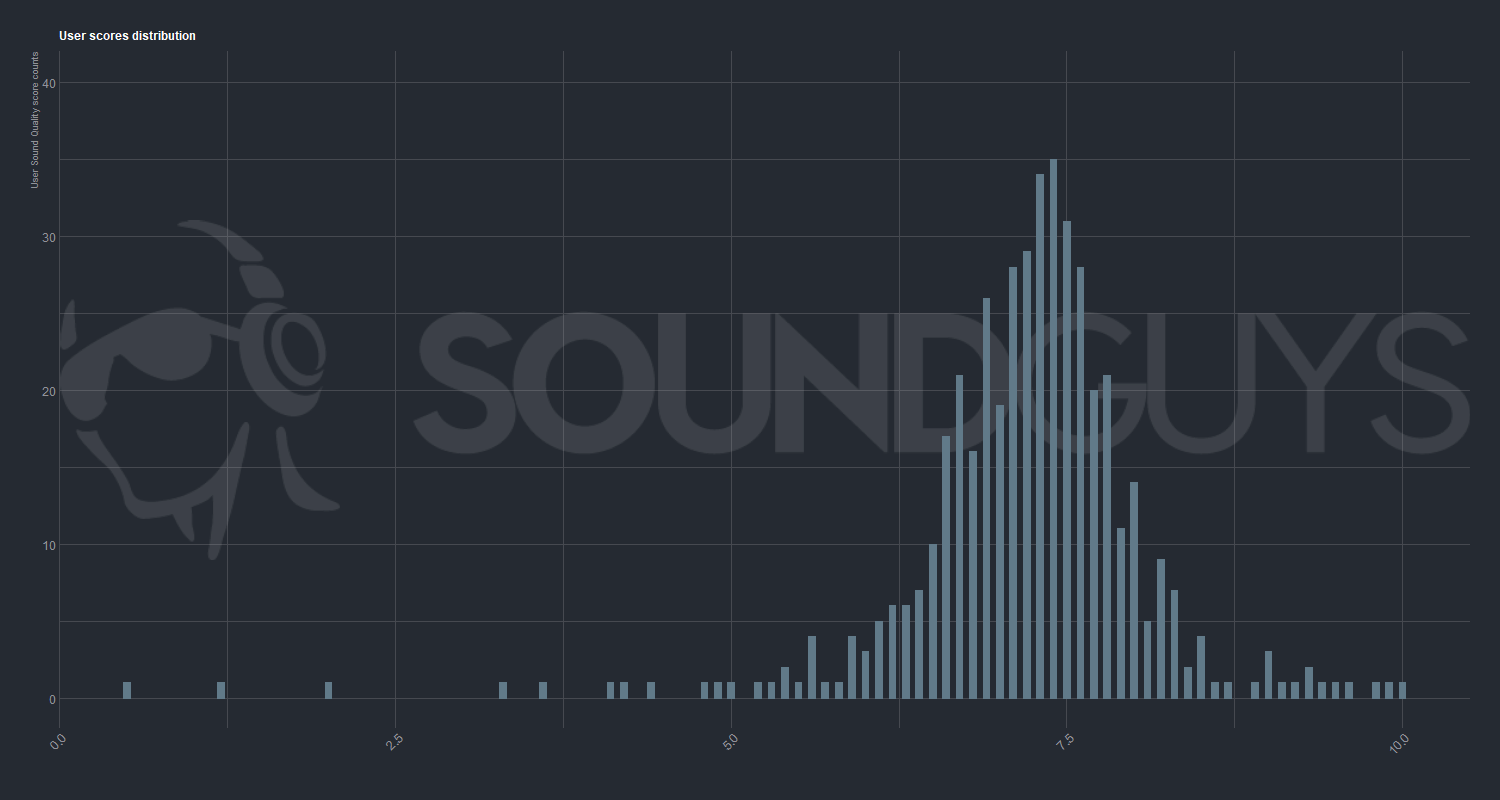

While it’s not a huge difference, it’s still a difference—and that’s worth exploring. So I plotted all the counts of user scores for sound quality and:

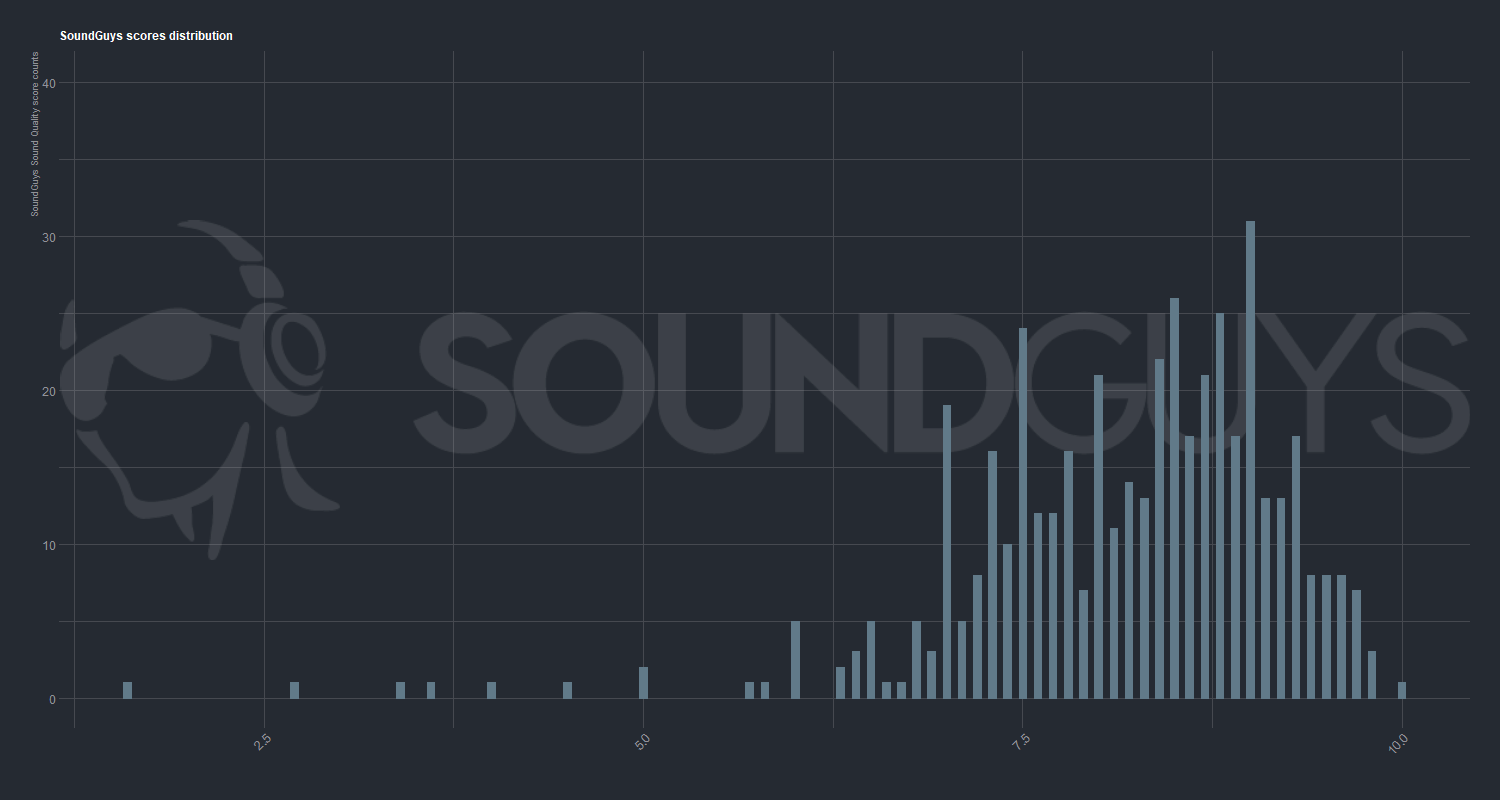
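For readers who want to try this on their own numbers, the tally behind a plot like that can be sketched in a few lines of Python. The scores below are invented for illustration and are not our user data, and `tally` and `text_histogram` are hypothetical helper names. The sketch counts how many scores land on each half-point step, then reports the center and spread.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical user sound-quality scores (invented for illustration).
USER_SCORES = [6.0, 6.5, 7.0, 7.0, 7.5, 7.5, 7.5, 8.0, 8.0, 8.5, 9.0]


def tally(scores, step=0.5):
    """Count how many scores fall on each step of the scale."""
    counts = Counter(round(s / step) * step for s in scores)
    return dict(sorted(counts.items()))


def text_histogram(counts):
    """Render the counts as rows of '#' marks, one row per score."""
    return "\n".join(f"{score:>4.1f} {'#' * n}" for score, n in counts.items())


counts = tally(USER_SCORES)
center = mean(USER_SCORES)   # where the scores cluster
spread = stdev(USER_SCORES)  # how widely they scatter around the center
print(text_histogram(counts))
```

A roughly symmetric pile of marks around the mean is what a bell-shaped distribution looks like in this form. A pile skewed toward the top of the scale would show up as longer rows at the high scores.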

A normal distribution (like the one above) around 7.5 is something you’d expect with complete coverage of a single category of headphones, but it’s a little strange that it worked out this way. I say this because the total breadth of coverage on SoundGuys tends to avoid both the upper and lower extremes of the headphones available, so our review selection is a little more curated. In that case, you wouldn’t necessarily expect scores to look like a nice, neat bell curve. If you were to try to review the top options of any industry, the scoring distribution would probably look a little less pretty, like the SoundGuys house scoring:

While that doesn’t tell us anything out of the gate, it’s possible that we should re-examine how we score sound quality: even though most of the scores are well separated, they gravitate more toward the top of the scale than I’d like to see. It’s possible that most headphones nowadays are approaching the point where their sound quality is a settled issue, but I don’t believe it. Not yet, anyway. We’re going to look to dial in these scores a bit more, and the good news is we have all of the test data on hand to do this. The big thing we have to do is make new scoring algorithms.

Proposed remedy: revisit scores for sound quality. Expand testing.

Be sure to check back as we explore new methods of scoring and analysis. While it’s tempting to acknowledge the difference and move on, we’re going to test a few things behind the scenes to hopefully bridge the gap between our readers and our own analysis. We feel like any excuse to improve our data collection is worth it.

Wrapping up

I do periodic audits of our methods and content, and this one was brewing for some time. Most of our slowness on some of these issues stems from needing a large corpus of data to analyze, and our readers have been very generous with their own opinions and assessments! We’re very appreciative of our readers’ help in grappling with a useful scoring system.

If you want to see changes, feel free to reach out on our contact page, or even just leave a question in the FAQ at the bottom of every article. While I can’t promise we’ll ever be perfect, I can promise that we’ll always try to do better than we did previously… and that’ll be true for as long as we’re around.

Happy listening.